Intro to TensorFlow - MNIST

rpi.analyticsdojo.com

Adapted from Hands-On Machine Learning with Scikit-Learn and TensorFlow by Aurélien Géron.

Apache License Version 2.0, January 2004 http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

For full license see repository.

Chapter 10 – Introduction to Artificial Neural Networks

This notebook contains all the sample code and solutions to the exercises in chapter 10.

74. Setup

First, let’s import a few common modules, define a helper that keeps the notebook's output stable across runs, make sure Matplotlib plots figures inline, and prepare a function to save the figures:

# Common imports
import os

import numpy as np
import tensorflow as tf  # reset_graph() below needs TensorFlow

# To make this notebook's output stable across runs
def reset_graph(seed=42):
    tf.reset_default_graph()
    tf.set_random_seed(seed)
    np.random.seed(seed)

# To plot pretty figures
%matplotlib inline
import matplotlib
import matplotlib.pyplot as plt
plt.rcParams['axes.labelsize'] = 14
plt.rcParams['xtick.labelsize'] = 12
plt.rcParams['ytick.labelsize'] = 12

# Where to save the figures
PROJECT_ROOT_DIR = "/home/jovyan/techfundamentals-fall2017-materials/classes/13-deep-learning"

def save_fig(fig_id, tight_layout=True):
    path = os.path.join(PROJECT_ROOT_DIR, 'images', fig_id + ".png")
    print("Saving figure", fig_id)
    if tight_layout:
        plt.tight_layout()
    plt.savefig(path, format='png', dpi=300)

74.1. MNIST

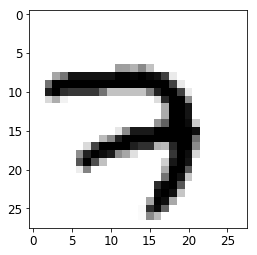

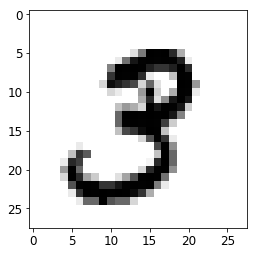

MNIST is a very common machine learning dataset; the goal is to classify handwritten digits.

This example uses the MNIST handwritten digits, split here into 55,000 examples for training and 10,000 examples for testing. The digits have been size-normalized and centered in a fixed-size image (28x28 pixels) with values from 0 to 1. For simplicity, each image has been flattened and converted to a 1-D NumPy array of 784 features (28*28).

More info: http://yann.lecun.com/exdb/mnist/
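To make the flattening concrete, here is a minimal sketch in plain Python (no NumPy or TensorFlow needed). It assumes row-major order, which is what NumPy's default reshape uses; the helper names `flat_index` and `unflatten` are made up for this illustration.

```python
SIDE = 28  # MNIST images are 28x28 pixels

def flat_index(row, col, side=SIDE):
    """Position of pixel (row, col) in the row-major flattened vector."""
    return row * side + col

def unflatten(flat, side=SIDE):
    """Rebuild a list of rows from a flat list of side*side values."""
    return [flat[r * side:(r + 1) * side] for r in range(side)]

# A dummy "image" whose pixel values are simply their flat positions.
flat_image = list(range(SIDE * SIDE))
image = unflatten(flat_image)

print(len(flat_image))     # 784 features per image
print(flat_index(27, 27))  # 783, the last pixel
print(image[1][0])         # 28, the first pixel of the second row
```

Reshaping back to 28x28 is only needed for display; the classifiers in this notebook consume the flat 784-feature vectors directly.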

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("/tmp/data/")
X_train = mnist.train.images
X_test = mnist.test.images
y_train = mnist.train.labels.astype("int")
y_test = mnist.test.labels.astype("int")
print("Training set: ", X_train.shape, "\nTest set: ", X_test.shape)

Extracting /tmp/data/train-images-idx3-ubyte.gz

Extracting /tmp/data/train-labels-idx1-ubyte.gz

Extracting /tmp/data/t10k-images-idx3-ubyte.gz

Extracting /tmp/data/t10k-labels-idx1-ubyte.gz

Training set: (55000, 784)

Test set: (10000, 784)

# List a few images and print the data to get a feel for it.
images = 2
for i in range(images):
    # Reshape the flat 784-feature vector back into a 28x28 image
    x = np.reshape(X_train[i], [28, 28])
    print(x)
    plt.imshow(x, cmap=plt.get_cmap('gray_r'))
    plt.show()
    # print("Model prediction:", preds[i])

[[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.38039219 0.37647063

0.3019608 0.46274513 0.2392157 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0.35294119 0.5411765 0.92156869

0.92156869 0.92156869 0.92156869 0.92156869 0.92156869 0.98431379

0.98431379 0.97254908 0.99607849 0.96078438 0.92156869 0.74509805

0.08235294 0. 0. 0. 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0.54901963 0.98431379 0.99607849 0.99607849

0.99607849 0.99607849 0.99607849 0.99607849 0.99607849 0.99607849

0.99607849 0.99607849 0.99607849 0.99607849 0.99607849 0.99607849

0.74117649 0.09019608 0. 0. 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0.88627458 0.99607849 0.81568635 0.78039223

0.78039223 0.78039223 0.78039223 0.54509807 0.2392157 0.2392157

0.2392157 0.2392157 0.2392157 0.50196081 0.8705883 0.99607849

0.99607849 0.74117649 0.08235294 0. 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0.14901961 0.32156864 0.0509804 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.13333334 0.83529419 0.99607849 0.99607849

0.45098042 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.32941177 0.99607849 0.99607849

0.91764712 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.32941177 0.99607849 0.99607849

0.91764712 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.41568631 0.6156863 0.99607849 0.99607849

0.95294124 0.20000002 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.09803922 0.45882356

0.89411771 0.89411771 0.89411771 0.99215692 0.99607849 0.99607849

0.99607849 0.99607849 0.94117653 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0.26666668 0.4666667 0.86274517 0.99607849

0.99607849 0.99607849 0.99607849 0.99607849 0.99607849 0.99607849

0.99607849 0.99607849 0.55686277 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0.14509805 0.73333335 0.99215692 0.99607849 0.99607849 0.99607849

0.87450987 0.80784321 0.80784321 0.29411766 0.26666668 0.84313732

0.99607849 0.99607849 0.45882356 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0.

0.44313729 0.8588236 0.99607849 0.94901967 0.89019614 0.45098042

0.34901962 0.12156864 0. 0. 0. 0.

0.7843138 0.99607849 0.9450981 0.16078432 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0.

0.66274512 0.99607849 0.6901961 0.24313727 0. 0. 0.

0. 0. 0. 0. 0.18823531 0.90588242

0.99607849 0.91764712 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0.

0.07058824 0.48627454 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.32941177 0.99607849

0.99607849 0.65098041 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.54509807 0.99607849 0.9333334

0.22352943 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.82352948 0.98039222 0.99607849 0.65882355

0. 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.94901967 0.99607849 0.93725497 0.22352943

0. 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.34901962 0.98431379 0.9450981 0.33725491 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0.01960784 0.80784321 0.96470594 0.6156863 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0.01568628 0.45882356 0.27058825 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]]

[[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0.12156864 0.51764709 0.99607849 0.99215692 0.99607849 0.83529419

0.32156864 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.08235294 0.55686277

0.91372555 0.98823535 0.99215692 0.98823535 0.99215692 0.98823535

0.87450987 0.07843138 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.48235297 0.99607849 0.99215692

0.99607849 0.99215692 0.87843144 0.7960785 0.7960785 0.87450987

1. 0.83529419 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.7960785 0.99215692 0.98823535

0.99215692 0.83137262 0.07843138 0. 0. 0.2392157

0.99215692 0.98823535 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0.16078432 0.95294124 0.87843144 0.7960785

0.71764708 0.16078432 0.59607846 0.11764707 0. 0. 1.

0.99215692 0.40000004 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.15686275 0.07843138 0. 0.

0.40000004 0.99215692 0.19607845 0. 0.32156864 0.99215692

0.98823535 0.07843138 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0.32156864 0.83921576 0.12156864 0.44313729 0.91372555 0.99607849

0.91372555 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.24313727 0.40000004

0.32156864 0.16078432 0.99215692 0.90980399 0.99215692 0.98823535

0.91372555 0.19607845 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.59607846 0.99215692

0.99607849 0.99215692 0.99607849 0.99215692 0.99607849 0.91372555

0.48235297 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.59607846 0.98823535

0.99215692 0.98823535 0.99215692 0.98823535 0.75294125 0.19607845

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.24313727 0.71764708

0.7960785 0.95294124 0.99607849 0.99215692 0.24313727 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0.15686275 0.67450982 0.98823535 0.7960785 0.07843138 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0.

0.08235294 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.71764708 0.99607849 0.43921572

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0.24313727

0.7960785 0.63921571 0. 0. 0. 0. 0.

0. 0. 0. 0.2392157 0.99215692 0.59215689

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0.08235294 0.83921576

0.75294125 0. 0. 0. 0. 0. 0.

0. 0. 0.04313726 0.83529419 0.99607849 0.59215689

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0.40000004 0.99215692

0.59215689 0. 0. 0. 0. 0. 0.

0. 0.16078432 0.83529419 0.98823535 0.99215692 0.43529415

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0.16078432 1.

0.83529419 0.36078432 0.20000002 0. 0. 0.12156864

0.36078432 0.67843139 0.99215692 0.99607849 0.99215692 0.55686277

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0.67450982

0.98823535 0.99215692 0.98823535 0.7960785 0.7960785 0.91372555

0.98823535 0.99215692 0.98823535 0.99215692 0.50980395 0.07843138

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0.08235294

0.7960785 1. 0.99215692 0.99607849 0.99215692 0.99607849

0.99215692 0.95686281 0.7960785 0.32156864 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0.

0.07843138 0.59215689 0.59215689 0.99215692 0.67058825 0.59215689

0.59215689 0.15686275 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]

[ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. ]]

74.2. TFLearn: Deep learning library featuring a higher-level API for TensorFlow

TFLearn is a modular and transparent deep learning library built on top of TensorFlow.

It was designed to provide a higher-level API to TensorFlow in order to facilitate and speed up experimentation, while remaining fully transparent and compatible with TensorFlow.

hidden_units (an argument of the DNNClassifier used below): list of hidden units per layer. All layers are fully connected. For example, [64, 32] means the first layer has 64 nodes and the second one has 32.
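As a rough illustration of what a given hidden_units list implies, the sketch below counts trainable parameters for a fully connected network. It assumes one weight per connection plus one bias per unit and nothing else; `dense_param_count` is a hypothetical helper, not part of any TensorFlow API.

```python
def dense_param_count(n_inputs, hidden_units, n_classes):
    """Count weights and biases in a stack of fully connected layers."""
    sizes = [n_inputs] + list(hidden_units) + [n_classes]
    total = 0
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        total += fan_in * fan_out  # one weight per connection
        total += fan_out           # one bias per unit
    return total

# 784 input features and 10 classes, as in MNIST
print(dense_param_count(784, [300, 100], 10))  # 266610
print(dense_param_count(784, [64, 32], 10))    # 52650
```

Most of the parameters sit in the first layer, because it connects all 784 pixels to every hidden unit.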

import tensorflow as tf

config = tf.contrib.learn.RunConfig(tf_random_seed=42) # not shown in the config
feature_cols = tf.contrib.learn.infer_real_valued_columns_from_input(X_train)
# List of hidden units per layer. All layers are fully connected. Ex. [64, 32] means first layer has 64 nodes and second one has 32.
dnn_clf = tf.contrib.learn.DNNClassifier(hidden_units=[300,100], n_classes=10,
                                         feature_columns=feature_cols, config=config)
dnn_clf = tf.contrib.learn.SKCompat(dnn_clf) # if TensorFlow >= 1.1
dnn_clf.fit(X_train, y_train, batch_size=50, steps=4000)

WARNING:tensorflow:Using temporary folder as model directory: /var/folders/2l/hz84cqd11f1_kgngygpjvbmh0000gn/T/tmp9ryubfoi

INFO:tensorflow:Using config: {'_task_id': 0, '_is_chief': True, '_save_checkpoints_steps': None, '_tf_config': gpu_options {

per_process_gpu_memory_fraction: 1

}

, '_keep_checkpoint_max': 5, '_save_summary_steps': 100, '_master': '', '_cluster_spec': <tensorflow.python.training.server_lib.ClusterSpec object at 0x11fc21ef0>, '_task_type': None, '_num_ps_replicas': 0, '_environment': 'local', '_evaluation_master': '', '_keep_checkpoint_every_n_hours': 10000, '_save_checkpoints_secs': 600, '_tf_random_seed': 42}

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-4-5b9939f7137a> in <module>()

5 dnn_clf = tf.contrib.learn.DNNClassifier(hidden_units=[300,100], n_classes=10,

6 feature_columns=feature_cols, config=config)

----> 7 dnn_clf = tf.contrib.learn.SKCompat(dnn_clf) # if TensorFlow >= 1.1

8 dnn_clf.fit(X_train, y_train, batch_size=50, steps=4000)

AttributeError: module 'tensorflow.contrib.learn' has no attribute 'SKCompat'

# We can use the scikit-learn metrics
from sklearn import metrics

y_pred = dnn_clf.predict(X_test)
# Accuracy: the fraction of test images classified correctly.
print("Accuracy score: ", metrics.accuracy_score(y_test, y_pred['classes']))
# Log loss scores the predicted class probabilities, not just the predicted class.
print("Logloss: ", metrics.log_loss(y_test, y_pred['probabilities']))
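To show what these two metrics measure, here is a small hand-rolled sketch in plain Python. It is not scikit-learn's implementation; the function names, the clipping constant `eps`, and the toy probabilities are assumptions made for this example.

```python
import math

def accuracy_by_hand(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def log_loss_by_hand(y_true, probabilities, eps=1e-15):
    """Mean negative log of the probability assigned to the true class."""
    total = 0.0
    for t, probs in zip(y_true, probabilities):
        p = min(max(probs[t], eps), 1 - eps)  # clip to avoid log(0)
        total -= math.log(p)
    return total / len(y_true)

# Toy data: 3 samples, 3 classes
y_true = [0, 1, 2]
probabilities = [[0.8, 0.1, 0.1],
                 [0.2, 0.5, 0.3],
                 [0.6, 0.3, 0.1]]
# The predicted class is the one with the highest probability.
y_pred = [max(range(len(p)), key=p.__getitem__) for p in probabilities]

print(y_pred)                                   # [0, 1, 0]
print(accuracy_by_hand(y_true, y_pred))         # 2 of 3 correct
print(log_loss_by_hand(y_true, probabilities))
```

Accuracy only looks at the winning class, while log loss also penalizes a model for being confidently wrong, as in the third sample.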

74.2.1. TensorFlow

Direct access to the TensorFlow Python API gives more flexibility.

As before, we first define the structure of the graph and then run it in a session.

First, set the layer sizes and create the placeholders:

import tensorflow as tf

n_inputs = 28*28 # MNIST
n_hidden1 = 300 # hidden units in first layer.
n_hidden2 = 100 # hidden units in second layer.
n_outputs = 10 # Classes of output variable.

# Placeholders
reset_graph()
X = tf.placeholder(tf.float32, shape=(None, n_inputs), name="X")
y = tf.placeholder(tf.int64, shape=(None), name="y")

def neuron_layer(X, n_neurons, name, activation=None):
    with tf.name_scope(name):
        n_inputs = int(X.get_shape()[1])
        # Random initial weights, with a standard deviation scaled by the number of inputs
        stddev = 2 / np.sqrt(n_inputs)
        init = tf.truncated_normal((n_inputs, n_neurons), stddev=stddev)
        W = tf.Variable(init, name="kernel")
        b = tf.Variable(tf.zeros([n_neurons]), name="bias")
        Z = tf.matmul(X, W) + b
        if activation is not None:
            return activation(Z)
        else:
            return Z
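The computation inside neuron_layer() is Z = XW + b followed by an optional activation. The sketch below reproduces that for a single input vector in plain Python, with hand-picked weights instead of random initialization; `dense_forward` and `relu` are illustrative names, not TensorFlow functions.

```python
def relu(values):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in values]

def dense_forward(x, W, b, activation=None):
    """x: n_inputs values; W: n_inputs x n_neurons nested list; b: n_neurons values."""
    z = [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
         for j in range(len(b))]
    return activation(z) if activation is not None else z

x = [1.0, 2.0]                 # 2 inputs
W = [[1.0, -1.0, 0.5],         # 2 inputs x 3 neurons
     [0.5, -2.0, 0.0]]
b = [0.0, 1.0, -1.0]

print(dense_forward(x, W, b))        # [2.0, -4.0, -0.5]
print(dense_forward(x, W, b, relu))  # [2.0, 0.0, 0.0]
```

With activation left as None, the layer returns the raw linear output, which is how the output layer produces logits.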

with tf.name_scope("dnn"):
    hidden1 = neuron_layer(X, n_hidden1, name="hidden1", activation=tf.nn.relu)
    hidden2 = neuron_layer(hidden1, n_hidden2, name="hidden2", activation=tf.nn.relu)
    logits = neuron_layer(hidden2, n_outputs, name="outputs")

with tf.name_scope("loss"):
    xentropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=logits)
    loss = tf.reduce_mean(xentropy, name="loss")
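The loss above applies softmax to the logits and takes the negative log of the probability of the true class, where "sparse" means labels are integer class ids rather than one-hot vectors. A minimal plain-Python sketch of that idea follows; it mirrors the math only and is not how TensorFlow implements the op, and the helper names and toy logits are assumptions.

```python
import math

def softmax(logits):
    """Convert logits to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_softmax_xent(label, logits):
    """Cross entropy for one example with an integer class label."""
    return -math.log(softmax(logits)[label])

batch_logits = [[2.0, 1.0, 0.1],
                [0.0, 0.0, 0.0]]
batch_labels = [0, 2]

losses = [sparse_softmax_xent(t, z) for t, z in zip(batch_labels, batch_logits)]
mean_loss = sum(losses) / len(losses)  # the role tf.reduce_mean plays above
print(losses)
print(mean_loss)
```

The second example has equal logits, so each of the 3 classes gets probability 1/3 and its loss is log(3).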

learning_rate = 0.01

with tf.name_scope("train"):
    optimizer = tf.train.GradientDescentOptimizer(learning_rate)
    training_op = optimizer.minimize(loss)

with tf.name_scope("eval"):
    correct = tf.nn.in_top_k(logits, y, 1)
    accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))
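With k=1, the in_top_k check asks whether the true class has the highest logit, and averaging the resulting booleans gives the accuracy. Here is a plain-Python sketch of that logic; the function below is a simplified stand-in written for this example, not the TensorFlow op.

```python
def in_top_k(logits, label, k=1):
    """True if fewer than k classes score strictly higher than the true class."""
    target = logits[label]
    higher = sum(1 for v in logits if v > target)
    return higher < k

batch_logits = [[0.1, 2.0, 0.3],
                [1.5, 0.2, 0.1],
                [0.0, 0.4, 0.9]]
batch_labels = [1, 0, 1]

correct = [in_top_k(z, t, 1) for z, t in zip(batch_logits, batch_labels)]
# Casting booleans to floats and averaging gives the accuracy.
accuracy = sum(float(c) for c in correct) / len(correct)

print(correct)   # [True, True, False]
print(accuracy)  # 2 of 3 correct
```

Raising k relaxes the check: with k=2 the third example would also count as correct, since its true class has the second-highest logit.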

init = tf.global_variables_initializer()

saver = tf.train.Saver()

74.2.2. Running the Analysis over 40 Epochs

One epoch is one pass through the entire training set, so 40 epochs means 40 passes.
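As a quick sanity check on how much work this is, the arithmetic below uses the 55,000 training examples reported earlier together with the batch size and epoch count set in the training code that follows. It is only a back-of-the-envelope sketch.

```python
n_train = 55000   # training examples, from the shape printed earlier
batch_size = 50   # examples per gradient step
n_epochs = 40     # passes over the training set

iterations_per_epoch = n_train // batch_size
total_updates = n_epochs * iterations_per_epoch

print(iterations_per_epoch)  # 1100 mini-batches per epoch
print(total_updates)         # 44000 gradient steps in total
```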

n_epochs = 40
batch_size = 50

with tf.Session() as sess:
    init.run()
    for epoch in range(n_epochs):
        for iteration in range(mnist.train.num_examples // batch_size):
            X_batch, y_batch = mnist.train.next_batch(batch_size)
            sess.run(training_op, feed_dict={X: X_batch, y: y_batch})
        # Train accuracy is measured on the last mini-batch only, so it is noisy
        acc_train = accuracy.eval(feed_dict={X: X_batch, y: y_batch})
        acc_test = accuracy.eval(feed_dict={X: mnist.test.images,
                                            y: mnist.test.labels})
        print("Epoc:", epoch, "Train accuracy:", acc_train, "Test accuracy:", acc_test)

    save_path = saver.save(sess, "./my_model_final.ckpt")

Epoc: 0 Train accuracy: 0.94 Test accuracy: 0.9084

Epoc: 1 Train accuracy: 0.94 Test accuracy: 0.9289

Epoc: 2 Train accuracy: 0.96 Test accuracy: 0.9401

Epoc: 3 Train accuracy: 0.94 Test accuracy: 0.946

Epoc: 4 Train accuracy: 0.96 Test accuracy: 0.9501

Epoc: 5 Train accuracy: 0.94 Test accuracy: 0.9546

Epoc: 6 Train accuracy: 0.94 Test accuracy: 0.957

Epoc: 7 Train accuracy: 0.98 Test accuracy: 0.9582

Epoc: 8 Train accuracy: 0.98 Test accuracy: 0.9613

Epoc: 9 Train accuracy: 0.96 Test accuracy: 0.9649

Epoc: 10 Train accuracy: 0.96 Test accuracy: 0.9661

Epoc: 11 Train accuracy: 0.98 Test accuracy: 0.9662

Epoc: 12 Train accuracy: 0.94 Test accuracy: 0.9679

Epoc: 13 Train accuracy: 1.0 Test accuracy: 0.9693

Epoc: 14 Train accuracy: 0.96 Test accuracy: 0.969

Epoc: 15 Train accuracy: 1.0 Test accuracy: 0.9703

Epoc: 16 Train accuracy: 1.0 Test accuracy: 0.9717

Epoc: 17 Train accuracy: 1.0 Test accuracy: 0.9718

Epoc: 18 Train accuracy: 0.98 Test accuracy: 0.9718

Epoc: 19 Train accuracy: 1.0 Test accuracy: 0.9736

Epoc: 20 Train accuracy: 1.0 Test accuracy: 0.9736

Epoc: 21 Train accuracy: 0.98 Test accuracy: 0.9735

Epoc: 22 Train accuracy: 1.0 Test accuracy: 0.9736

Epoc: 23 Train accuracy: 1.0 Test accuracy: 0.9745

Epoc: 24 Train accuracy: 1.0 Test accuracy: 0.9758

Epoc: 25 Train accuracy: 1.0 Test accuracy: 0.9758

Epoc: 26 Train accuracy: 1.0 Test accuracy: 0.9756

Epoc: 27 Train accuracy: 1.0 Test accuracy: 0.9757

Epoc: 28 Train accuracy: 1.0 Test accuracy: 0.977

Epoc: 29 Train accuracy: 0.98 Test accuracy: 0.9762

Epoc: 30 Train accuracy: 1.0 Test accuracy: 0.9773

Epoc: 31 Train accuracy: 1.0 Test accuracy: 0.977

Epoc: 32 Train accuracy: 1.0 Test accuracy: 0.9769

Epoc: 33 Train accuracy: 0.98 Test accuracy: 0.9773

Epoc: 34 Train accuracy: 0.98 Test accuracy: 0.9758

Epoc: 35 Train accuracy: 0.98 Test accuracy: 0.978

Epoc: 36 Train accuracy: 0.98 Test accuracy: 0.9787

Epoc: 37 Train accuracy: 0.98 Test accuracy: 0.9779

Epoc: 38 Train accuracy: 1.0 Test accuracy: 0.9785

Epoc: 39 Train accuracy: 1.0 Test accuracy: 0.978

with tf.Session() as sess:
    saver.restore(sess, "./my_model_final.ckpt") # or better, use save_path
    X_new_scaled = mnist.test.images[:20]
    Z = logits.eval(feed_dict={X: X_new_scaled})
    y_pred = np.argmax(Z, axis=1)

print("Predicted classes:", y_pred)
print("Actual classes:   ", mnist.test.labels[:20])
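The prediction step takes the argmax of the raw logits without applying softmax first. That works because softmax preserves the ordering of the scores, as the plain-Python sketch below illustrates on made-up logits; the helper names are assumptions for this example.

```python
import math

def softmax(logits):
    """Convert logits to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

batch_logits = [[1.2, -0.3, 4.5],
                [0.0, 2.2, 2.1]]

pred_from_logits = [argmax(z) for z in batch_logits]
pred_from_probs = [argmax(softmax(z)) for z in batch_logits]

print(pred_from_logits)  # [2, 1]
print(pred_from_probs)   # [2, 1]
```

Softmax is only needed when the class probabilities themselves are of interest, for instance to compute a log loss.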

from IPython.display import clear_output, Image, display, HTML

def strip_consts(graph_def, max_const_size=32):
    """Strip large constant values from graph_def."""
    strip_def = tf.GraphDef()
    for n0 in graph_def.node:
        n = strip_def.node.add()
        n.MergeFrom(n0)
        if n.op == 'Const':
            tensor = n.attr['value'].tensor
            size = len(tensor.tensor_content)
            if size > max_const_size:
                # tensor_content is a bytes field, so assign a bytes literal
                tensor.tensor_content = b"<stripped %d bytes>"%size
    return strip_def

def show_graph(graph_def, max_const_size=32):
    """Visualize TensorFlow graph."""
    if hasattr(graph_def, 'as_graph_def'):
        graph_def = graph_def.as_graph_def()
    strip_def = strip_consts(graph_def, max_const_size=max_const_size)
    code = """
        <script>
          function load() {{
            document.getElementById("{id}").pbtxt = {data};
          }}
        </script>
        <link rel="import" href="https://tensorboard.appspot.com/tf-graph-basic.build.html" onload=load()>
        <div style="height:600px">
          <tf-graph-basic id="{id}"></tf-graph-basic>
        </div>
    """.format(data=repr(str(strip_def)), id='graph'+str(np.random.rand()))

    # Escape double quotes so the HTML can be embedded in the srcdoc attribute
    iframe = """
        <iframe seamless style="width:1200px;height:620px;border:0" srcdoc="{}"></iframe>
    """.format(code.replace('"', '&quot;'))
    display(HTML(iframe))

show_graph(tf.get_default_graph())

74.3. Using dense() instead of neuron_layer()

Note: the book uses tensorflow.contrib.layers.fully_connected() rather than tf.layers.dense() (which did not exist when this chapter was written). It is now preferable to use tf.layers.dense(), because anything in the contrib module may change or be deleted without notice. The dense() function is almost identical to the fully_connected() function, except for a few minor differences:

- several parameters are renamed: scope becomes name, activation_fn becomes activation (and similarly the _fn suffix is removed from other parameters such as normalizer_fn), weights_initializer becomes kernel_initializer, etc.
- the default activation is now None rather than tf.nn.relu.
- a few more differences are presented in chapter 11.

n_inputs = 28*28 # MNIST
n_hidden1 = 300
n_hidden2 = 100
n_outputs = 10

reset_graph()

X = tf.placeholder(tf.float32, shape=(None, n_inputs), name="X")
y = tf.placeholder(tf.int64, shape=(None), name="y")

with tf.name_scope("dnn"):
    hidden1 = tf.layers.dense(X, n_hidden1, name="hidden1",
                              activation=tf.nn.relu)
    hidden2 = tf.layers.dense(hidden1, n_hidden2, name="hidden2",
                              activation=tf.nn.relu)
    logits = tf.layers.dense(hidden2, n_outputs, name="outputs")

with tf.name_scope("loss"):
    xentropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=logits)
    loss = tf.reduce_mean(xentropy, name="loss")

learning_rate = 0.01

with tf.name_scope("train"):
    optimizer = tf.train.GradientDescentOptimizer(learning_rate)
    training_op = optimizer.minimize(loss)

with tf.name_scope("eval"):
    correct = tf.nn.in_top_k(logits, y, 1)
    accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

init = tf.global_variables_initializer()
saver = tf.train.Saver()

n_epochs = 20
batch_size = 50  # the loop below reads batch_size, so define it here

with tf.Session() as sess:
    init.run()
    for epoch in range(n_epochs):
        for iteration in range(mnist.train.num_examples // batch_size):
            X_batch, y_batch = mnist.train.next_batch(batch_size)
            sess.run(training_op, feed_dict={X: X_batch, y: y_batch})
        acc_train = accuracy.eval(feed_dict={X: X_batch, y: y_batch})
        acc_test = accuracy.eval(feed_dict={X: mnist.test.images, y: mnist.test.labels})
        print(epoch, "Train accuracy:", acc_train, "Test accuracy:", acc_test)

    save_path = saver.save(sess, "./my_model_final.ckpt")

show_graph(tf.get_default_graph())